How I Use AI to Show Up Better for Customers

Customer Success is a thinking role. The hard part isn't sending emails or taking notes. It's understanding messy signals, seeing risk early, and showing up to conversations prepared enough to be useful.

This isn't a post about automating relationships. I don't believe in that. This is about using AI as a force multiplier for judgment, preparation, and follow-through.

What AI is good at vs. what humans are for

AI is very good at ingesting large amounts of context, pattern matching across messy inputs, drafting and restructuring thoughts, and acting as a second brain that never gets tired.

AI is bad at accountability, taste, earning trust, knowing when silence matters, and making the final call.

So I use AI everywhere I want leverage. And nowhere I need ownership.

The mistake I see is people trying to replace the human parts of CS instead of strengthening them.

How I actually use AI

These aren't experiments. These are things I use every week.

Pre-meeting preparation that changes the conversation

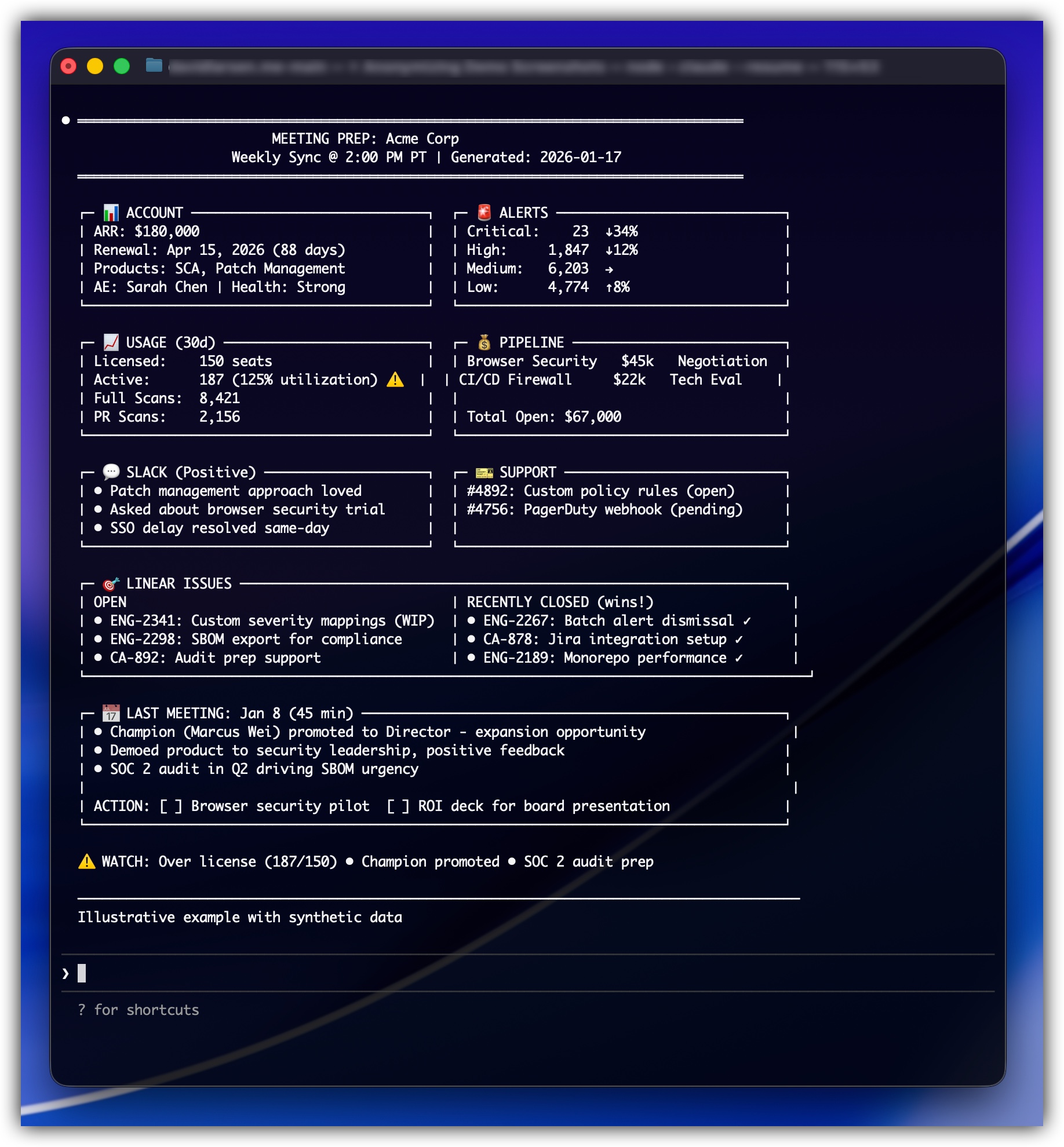

Illustrative example with synthetic data

Before important customer calls, I use AI to synthesize context I already have but don't have time to fully re-load: past notes and transcripts, support history, product usage patterns, open bugs or feature requests, renewal timing and account changes.

I use different models for different jobs. Some are better at retrieval and assembly. Others are stronger at synthesis or surfacing tensions in the data. The AI assembles context and surfaces questions worth asking. It doesn't replace my judgment about which ones actually matter.

The output I want is not a summary. A summary tells me what happened. I care about what changed and what it implies. The result is a structured briefing, not prose: what's different since the last meaningful conversation, where there might be risk I haven't named yet, and what questions are worth asking now.

I've shared a sanitized example of one of these AI-assisted meeting briefs here: customer-success-playbooks.

The result: I show up asking better questions sooner. Customers feel like I'm paying attention. Because I am.

Post-meeting synthesis

After calls, I use AI to turn raw notes into clear takeaways, explicit risks, open decisions, and follow-ups that actually matter. I'm not pasting AI output into a CRM and calling it a day. I'm using it to pressure-test my own understanding.

If the synthesis feels fuzzy, that's a signal I didn't get clarity on the call. The payoff: fewer dropped threads, cleaner follow-ups, and less "wait, didn't we already talk about this?"

Risk thinking and churn prevention

This is one of the highest-leverage uses.

I use AI to help me reason about risk across multiple dimensions at once: engagement drift, product usage that looks "fine" but is shallow, unresolved technical friction, changes in stakeholder behavior, silence where there used to be noise.

AI doesn't decide if an account is at risk. I do. But it's very good at surfacing weak signals I might rationalize away. This is where intuition becomes a system instead of a gut feeling, and problems get addressed earlier, while they're still fixable.
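To make "reasoning across multiple dimensions" a bit more concrete, here is a minimal Python sketch of a checklist that surfaces weak signals for a human to review. It deliberately returns notes rather than a score, because the call stays with the person who owns the account. The `AccountSnapshot` fields, the `surface_signals` name, and every threshold are hypothetical assumptions for illustration, not values from any real system.

```python
from dataclasses import dataclass


@dataclass
class AccountSnapshot:
    # Hypothetical fields; map them to whatever your own systems expose.
    name: str
    sessions_last_30d: int
    sessions_prior_30d: int
    features_used: int
    features_available: int
    oldest_open_ticket_days: int
    champion_changed: bool
    days_since_customer_reply: int


def surface_signals(acct: AccountSnapshot) -> list[str]:
    """Return weak risk signals for a human to review. Does not score or decide."""
    signals = []
    # Engagement drift: sessions down noticeably versus the prior window
    # (the 30% drop threshold is an illustrative assumption).
    if acct.sessions_prior_30d > 0 and acct.sessions_last_30d < 0.7 * acct.sessions_prior_30d:
        signals.append("engagement drift: sessions down more than 30% vs prior 30 days")
    # Usage that looks "fine" but is shallow: few features actually in use.
    if acct.features_available > 0 and acct.features_used / acct.features_available < 0.25:
        signals.append("shallow usage: under 25% of available features in use")
    # Unresolved technical friction: an old open ticket.
    if acct.oldest_open_ticket_days > 14:
        signals.append(f"technical friction: ticket open {acct.oldest_open_ticket_days} days")
    # Change in stakeholder behavior: the primary champion changed.
    if acct.champion_changed:
        signals.append("stakeholder change: primary champion changed")
    # Silence where there used to be noise.
    if acct.days_since_customer_reply > 21:
        signals.append(f"silence: no customer reply in {acct.days_since_customer_reply} days")
    return signals


if __name__ == "__main__":
    example = AccountSnapshot("Example Co", 40, 100, 3, 20, 30, True, 25)
    for line in surface_signals(example):
        print("-", line)
```

Output like this is a starting point for a conversation or a prompt, not a verdict. An empty list doesn't mean an account is safe; it only means none of these particular checks fired.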

Internal leverage

A lot of Customer Success impact is internal. I use AI to draft clear internal updates, turn messy thinking into structured proposals, sanity-check arguments before I bring them to leadership, and improve bug reports and feature requests so engineering can actually act.

One underrated move: having one AI critique or validate another's output. I use this for high-stakes or ambiguous work. Executive summaries, proposals where the framing matters, anything where I need to catch blind spots. The goal isn't perfection; I stop when the output is clear enough to explain and act on.

Faster internal decisions mean fewer customer stalls.

Building small tools instead of buying big ones

When I need something specific and tactical, I don't default to buying software. I've used AI to help me build internal tools that pull data from systems we already use, automate annoying but necessary workflows, and surface information exactly where the team needs it.

These are small, focused tools built to support real team workflows, not side projects. Building them this way avoids months of vendor evals and shelfware. More time solving real problems, less time lost to tooling overhead.

Where I deliberately do not use AI

This part matters more than people admit.

I do not use AI to write messages that carry accountability or apology, deliver difficult feedback, make renewal decisions, decide priorities when trade-offs are real, or replace real listening on calls.

If the moment requires judgment, ownership, or trust, AI stays out of it.

I'll draft with AI. I'll think with AI. But I won't outsource responsibility.

A note on data handling

In enterprise CS, how you handle customer data matters. I avoid putting raw customer data into AI tools where possible. I prefer working with abstractions, anonymized summaries, or recreating issues locally when I need to troubleshoot.

My rule of thumb is simple: if I wouldn't be comfortable explaining this AI use to our security team or to a customer, I don't do it. This isn't about checking compliance boxes. It's about maintaining the trust that makes the work possible in the first place.
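As a minimal sketch of the "anonymized summaries" idea, the Python function below masks email addresses and swaps known names for placeholders before text goes anywhere near an AI tool. The `redact` name and the name-to-placeholder mapping are hypothetical. This is simple pattern matching, not a complete anonymization method: it will miss names you don't list, phone numbers, account IDs, and anything else it isn't told about, so it complements judgment rather than replacing it.

```python
import re

# Rough email pattern; good enough for masking, not for validation.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact(text: str, names: dict[str, str]) -> str:
    """Mask emails, then replace known names (case-insensitive) with placeholders."""
    # Mask emails first so a company name inside a domain can't break the match.
    text = EMAIL_RE.sub("[EMAIL]", text)
    # Replace longer names first so "Acme Corp" is handled before "Acme".
    for name in sorted(names, key=len, reverse=True):
        placeholder = names[name]
        pattern = r"\b" + re.escape(name) + r"\b"
        # A function replacement avoids backslash handling in the placeholder.
        text = re.sub(pattern, lambda _m: placeholder, text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    note = "Jane Doe (jane.doe@acme.com) said Acme Corp may delay renewal."
    mapping = {"Jane Doe": "[CONTACT]", "Acme Corp": "[CUSTOMER]", "Acme": "[CUSTOMER]"}
    print(redact(note, mapping))
```

Keeping the mapping local means the placeholders can be swapped back into the AI's output afterward without the real names ever leaving your machine.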

The trade-offs

There are real risks here. You can over-delegate thinking. You can mistake fluency for understanding. You can move faster in the wrong direction.

AI works best for me when I understand the problem well enough to disagree with the output. If I can't push back on what it gives me, I'm not ready to use it.

AI amplifies whatever you bring to it. If your instincts are weak, it will make that worse.

Used well, though, it gives you something rare in CS: time and mental space to think clearly.

Closing thought

Customer Success doesn't fail because people don't care. It fails because people are overloaded and reactive.

AI helps me be more intentional. Not louder. Not faster. More precise.

That's how you prevent churn. That's how you earn trust. That's how I think about Customer Success.